Amazon S3 is a simple, reliable, and cost-effective object store that provides nearly endless capacity to safely store data in the cloud. Its flexibility allows users the ability to not only persist data ranging from bytes to petabytes, but also consume it via a myriad of tools like Amazon Athena and Qubole.

Pricing

Amazon S3 pricing is based on two factors: The amount of data stored in and location (region) of your Amazon S3 bucket.

To learn more about pricing, refer to Amazon’s S3 pricing page. Note: Remember to select the correct region to view accurate pricing.

Setup info

High-level details about Stitch’s implementation of Amazon S3, such as supported connection methods, availability on Stitch plans, etc.

Replication

Details about Stitch and replication for Amazon S3 destinations.

| Incremental Replication - Upserts |

Unsupported Stitch’s Amazon S3 destination doesn’t currently support upserts, or updates to existing rows. When Stitch loads data into Amazon S3 destinations, it will load it in an append-only fashion. This means that new records and updates to existing records are appended to the end of tables as new rows. Existing records are never updated. More info and examples can be found in the Loading section and Append-Only querying guide. |

| Primary Keys |

Unsupported Amazon S3 destinations do not have native support for Primary Keys. |

| Nested data structure support |

Depends Depending on the data storage format you select, nested data structures may be kept intact or flattened into relational objects:

Refer to the Schema section for more info and examples of how data will be stored in each format. |

| Incompatible sources |

No reported incompatibilities No integration compatibility issues have been reported for Amazon S3. |

Replication process overview

A Stitch replication job consists of three stages: Extraction, Preparation, and Loading.

Data extraction

During the Extraction phase, Stitch will check for structural changes to your data, query for data according to the integration’s replication settings, and extract the appropriate data.

Replication settings include the integration’s Replication Schedule, the data set to replicate, and the selected tables’ Replication Methods.

Note: Because Stitch’s Incremental Replication Method is inclusive, a single row will be replicated for every Incremental table even if there’s no new or updated data. Refer to the Replication Methods documentation for an explanation and examples.

Data preparation/transformations for Amazon S3

During the Preparation phase, Stitch applies some light transformations to the extracted data to ensure compatibility with the destination.

The transformations Stitch performs depends on the selected data storage format (CSV or JSON). Aside from these transformations, the data loaded into Amazon S3 is in its raw form. Refer to the Schema section for more info and examples.

| CSV | JSON |

|---|---|

|

|

Loading data into Amazon S3

During Loading, Stitch loads the extracted data into the destination. For Amazon S3 destinations, data is loaded in an Append-Only fashion.

This means that:

- A new CSV or JSON file for every table replicated is created during each load

- Existing records - that is, records already in the bucket - are never updated

- Data will not be de-duped, meaning that multiple versions of the same record may exist in the data warehouse

Because of this loading strategy, querying may require a different strategy than usual. Using some of the system columns Stitch inserts into tables will enable you to locate the latest version of a record at query time. Refer to the Querying Append-Only Tables documentation for more info.

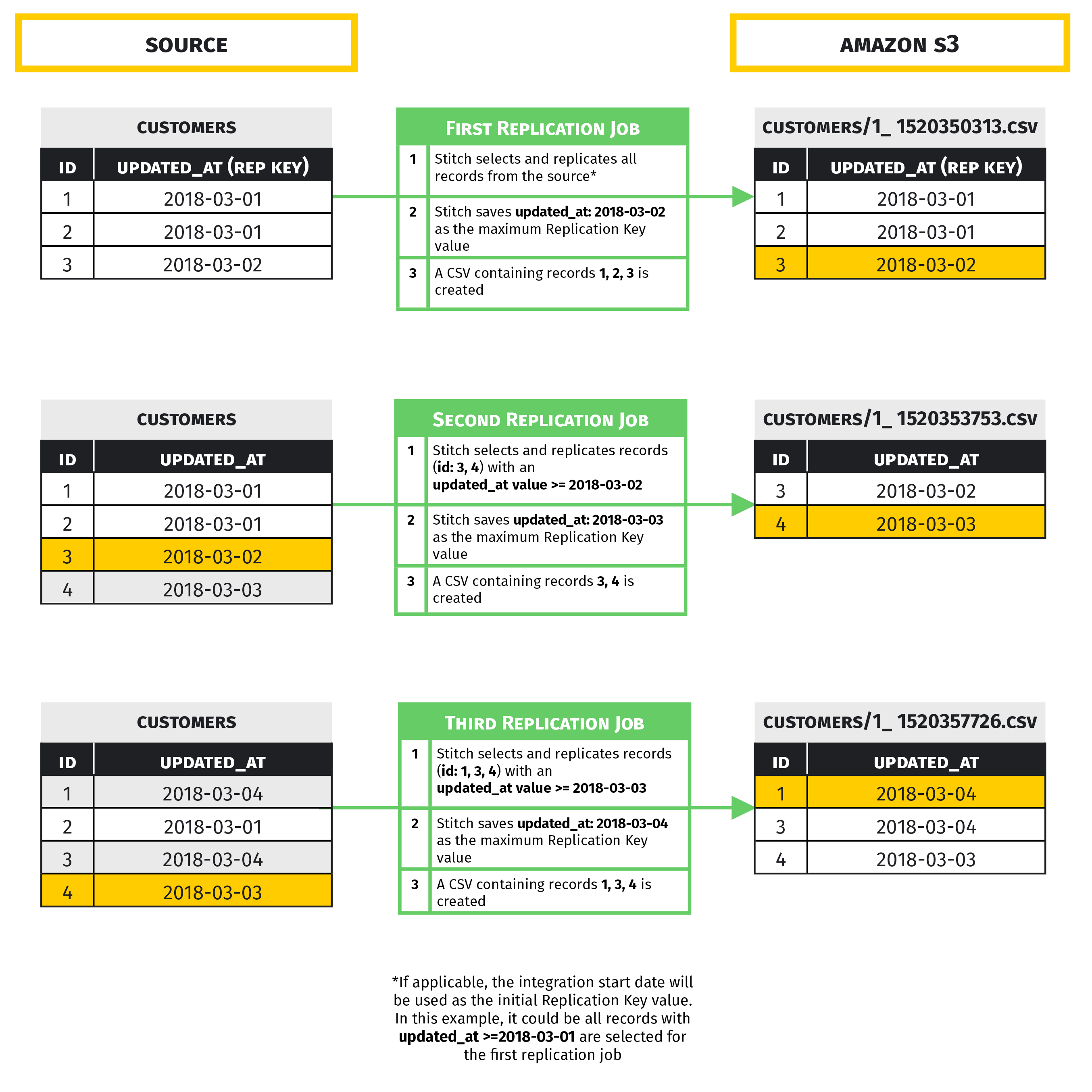

Example: Key-based Incremental Replication

Below is an example of how tables using Key-based Incremental Replication will be loaded into Amazon S3:

Schema

The file structure of your integrations’ data in your Amazon S3 bucket depends on two destination parameters:

- The definition of the Object Key, and

- The selected data storage format (CSV or JSON)

Object Keys and file structure

Amazon S3 uses what is called an Object Key to uniquely identify objects in a bucket. During the Stitch setup process, you have the option of using our default Object Key or defining your own using a handful of Stitch-approved elements. Refer to the Amazon S3 Setup instructions for more info on the available elements.

The S3 Key setting determines the convention Stitch uses to create Object Keys when it writes to your bucket. It also defines the folder structure of Stitch-replicated data.

Below is the default Key and two examples of an Object Key that an integration named salesforce-prod might produce:

/* Default Key */

[integration_name]/[table_name]/[table_version]_[timestamp_loaded].[csv|jsonl]

/* Example Object Keys */

- salesforce-prod/account/1_1519235654474.[csv|jsonl]

- salesforce-prod/opportunity/1_1519327555000.[csv|jsonl]

As previously mentioned, the S3 Key also determines the folder structure of replicated data. In the AWS console, the folder structure for the salesforce-prod integration would look like the following:

.

└── salesforce-prod

└── account

| └── 1_1519235654474.[csv|jsonl]

└── opportunity

| └── 1_1519327555000.[csv|jsonl]

└──

└── 1_[timestamp].jsonl

└── 1_[timestamp].jsonl

####

For every integration you connect, an folder will be created in the integration's directory in Amazon S3. acts as a log for records rejected during the loading process. For every load where a rejection occurs, a .jsonl file containing data about the rejection will be placed in the `` folder.

Data storage formats

Stitch will store replicated data in the format you select during the initial setup of Amazon S3. Currently Stitch supports storing data in CSV or JSON format for Amazon S3 destinations.

The tabs below contain an example of raw source data and how it would be stored in Amazon S3 for each data storage format type.

{

"contacts":[

{

"id":2078178,

"name":"Bubblegum",

"phone_numbers":[

{

"mobile":"0987654321",

"work":"7896541230"

}

],

"personas":[

{

"id":"persona_1",

"type":"Princess"

},

{

"id":"persona_2",

"type":"Heroine"

}

],

"updated_at":"2018-01-01T00:59:16Z"

}

]

}

Top-level Table

In Amazon S3, this data would create a file named contacts/1_[timestamp].csv, which would look like this:

| id | name | phone_numbers__mobile | phone_numbers__work | updated_at |

| 2078178 | Bubblegum | 0987654321 | 7896541230 | 2018-01-01T00:59:16Z |

While objects (like phone_numbers) will be flattened into the table, arrays are handled differently.

Subtables

Arrays will be de-nested and flattened into subtables. In this example, the name of the file would be contacts/personas/1_[timestamp].csv:

| _sdc_source_key_ id | _sdc_level_0_id | id | type |

| 2078178 | 0 | persona_1 | Princess |

| 2078178 | 1 | persona_2 | Heroine |

For more info and examples on how Stitch flattens nested data structures, refer to the Nested Data Structures guide.

With the exception of the _sdc columns, Stitch will store replicated data intact as .jsonl files. In this example, the name of the file would be contacts/1_[timestamp].jsonl:

{

"id":2078178,

"name":"Bubblegum",

"phone_numbers":[

{

"mobile":"0987654321",

"work":"7896541230"

}

],

"personas":[

{

"id":"persona_1",

"type":"Princess"

},

{

"id":"persona_2",

"type":"Heroine"

}

],

"updated_at":"2018-01-01T00:59:16Z",

"_sdc_extracted_at":"2018-01-01T01:10:53Z",

"_sdc_received_at":"2018-01-01T01:10:53Z",

"_sdc_batched_at":"2018-01-01T01:11:04Z",

"_sdc_table_version":0,

"_sdc_sequence":1514769053000

}

Limitations

Details about data limitations imposed by Amazon S3 destinations.

| Max record size |

4 MB The maximum record size Stitch can accept and load into Amazon S3 destinations. Records that exceed this limit will be rejected. Rejected columns are logged in the |

Compare destinations

Not sure if Amazon S3 is the data warehouse for you? Check out the Choosing a Stitch Destination guide to compare each of Stitch’s destination offerings.

| Related | Troubleshooting |

Questions? Feedback?

Did this article help? If you have questions or feedback, feel free to submit a pull request with your suggestions, open an issue on GitHub, or reach out to us.